How to use Amorphic APIs?

info

- Follow the steps mentioned below.

- Total time taken for this task: 10 Minutes.

- Pre-requisites: User registration is complete, and you have logged in to Amorphic and switched to your assigned role.

Create a personal access token

- Click on the 👤 icon at the top right corner --> Profile & Settings --> Manage Access Tokens.

- Click on the ➕ icon at the top right corner.

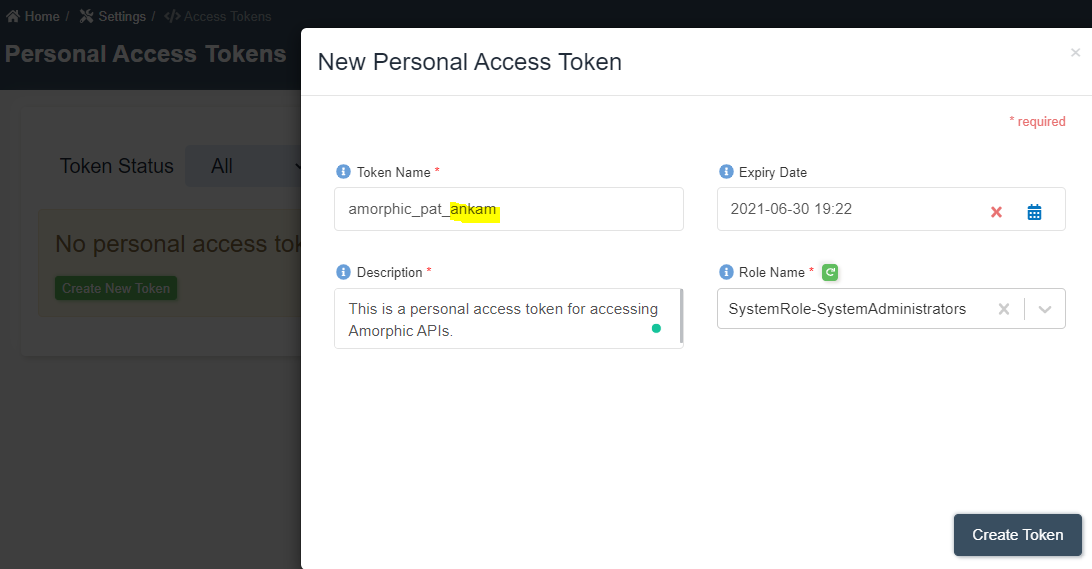

- Enter the following details and click on Create Token.

Token Name: amorphic_pat_<your_userid>

Expiry Date: Set one week from now.

Description: This is a personal access token for accessing Amorphic APIs.

Role Name: SystemRole-SystemAdministrators or whichever role you have been assigned.

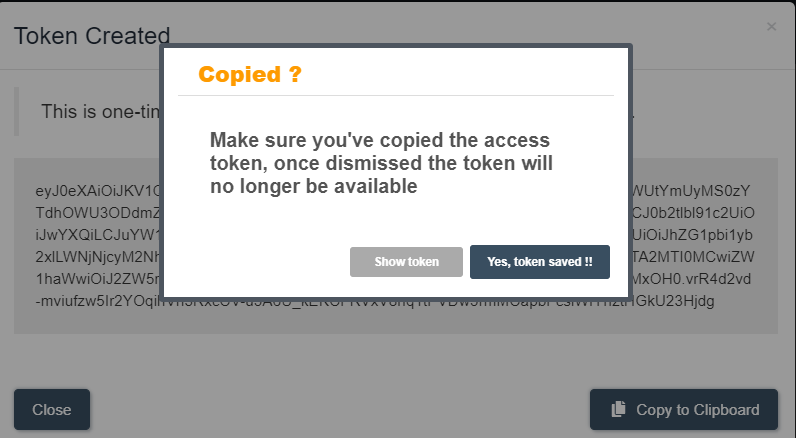

- Once you click Create Token, it will show a token. Copy it to your local computer.

- When you click Close, it will ask for confirmation. Click on 'Yes, token saved' as shown below.

Access APIs from a local Python program

- You need the following details for your program.

- Click on the Documentation icon at the top right corner, choose API Docs, and get the Base URI. The documentation shows the API call type and parameters as well.

- Click on Management --> Roles on the left navigation bar. Click on View Details of the SystemRole-SystemAdministrators role. Copy the Role Id.

- Create the following Python program and replace amorphic_base_uri, amorphic_token, and amorphic_role_id before running it.

import json

import requests

amorphic_base_uri = "https://xxxxxxxxxxx.execute-api.us-east-1.amazonaws.com/wkr"

amorphic_token = "eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzUxMiJ9........"

amorphic_role_id = "admin-role-cc6723ca-........"

headers = {

'Authorization': amorphic_token,

'role_id': amorphic_role_id,

'Content-Type': 'application/json'

}

payload = {

"DatasetName": "create_ds_using_api_<your_userid>",

"DatasetDescription": "This dataset is created using Amorphic APIs",

"DataClassification": ["internal"],

"Domain": "workshop",

"FileType": "csv",

"TargetLocation": "s3",

"Keywords": ["owner:ankamv","API"],

"TableUpdate": "Append",

"ConnectionType": "api"

}

print("Creating a dataset")

url = amorphic_base_uri + "/datasets"

r = requests.post(url, data=json.dumps(payload), headers=headers)

#print(r.text)

parsed = json.loads(r.text)

print(json.dumps(parsed, indent=4, sort_keys=True))

- Once you run it, you get the message "Dataset registration completed successfully" along with the dataset ID.

- Go to the dataset details page and verify.
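The program above parses the response without checking whether the call succeeded. A minimal sketch of a more defensive variant follows. It assumes the API signals failures with a non-2xx HTTP status, which is not confirmed here. The helper name `create_dataset` and its `post` parameter are hypothetical and exist only so the function can be exercised without a network call.

```python
def create_dataset(base_uri, token, role_id, payload, post=None, timeout=30):
    """POST a dataset definition to <base_uri>/datasets and return the parsed JSON reply.

    `post` is a hypothetical injection point: pass any callable with the same
    signature as requests.post to try this helper offline.
    """
    if post is None:
        import requests  # imported lazily; only needed for real calls
        post = requests.post

    headers = {
        "Authorization": token,
        "role_id": role_id,
        "Content-Type": "application/json",
    }
    url = base_uri.rstrip("/") + "/datasets"

    # json= serializes the payload; timeout keeps the call from hanging forever
    response = post(url, json=payload, headers=headers, timeout=timeout)
    # Assumption: failures come back as 4xx/5xx, so this raises on error
    response.raise_for_status()
    return response.json()
```

With real values, `create_dataset(amorphic_base_uri, amorphic_token, amorphic_role_id, payload)` would return the same parsed JSON that the original program prints.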

Access APIs from an Amorphic ETL job

- Create a job with the job type 'Python Shell'.

- Use the same program as above and run it.

- Instead of writing personal access tokens in plain text, you may add them to the parameter store under 'Management' in the left navigation bar.

- Access parameters in an ETL job as shown below.

import boto3

## Read parameters from Parameter Store

ssm_client = boto3.client("ssm")

wsaar_param = ssm_client.get_parameter(Name="wsaar-token-1", WithDecryption=True)

wsaar_token = str(wsaar_param["Parameter"]["Value"])

print(wsaar_token)
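The token read from the parameter store can feed the request headers directly instead of being printed, since anything printed ends up in the job logs. The sketch below is one way to wire this up. `load_token` and `build_headers` are hypothetical helper names, and the parameter name is whatever you stored the token under.

```python
def load_token(ssm_client, parameter_name):
    """Return the decrypted value of a parameter store entry as a string."""
    param = ssm_client.get_parameter(Name=parameter_name, WithDecryption=True)
    return str(param["Parameter"]["Value"])


def build_headers(token, role_id):
    """Build the same three headers used by the dataset program earlier on this page."""
    return {
        "Authorization": token,
        "role_id": role_id,
        "Content-Type": "application/json",
    }


# Usage inside the ETL job (needs boto3 and access to the parameter store):
#   ssm_client = boto3.client("ssm")
#   token = load_token(ssm_client, "wsaar-token-1")
#   headers = build_headers(token, amorphic_role_id)
```

Passing the client in as an argument keeps the helper easy to try with a stub object before running it in a real job.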

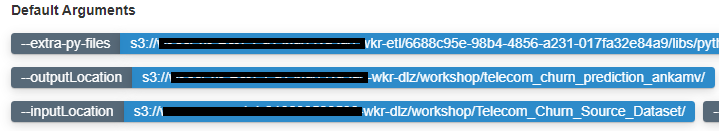

- You may add more 'default arguments' as shown below.

- These arguments can be accessed within the program as shown below.

import sys

import boto3

import pandas as pd

from awsglue.utils import getResolvedOptions

## Read job arguments

args = getResolvedOptions(sys.argv, ['inputLocation', 'outputLocation'])

inputLocation = args['inputLocation']

outputLocation = args['outputLocation']

print(inputLocation, outputLocation)
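`awsglue` is generally only available inside the job runtime, so the snippet above may not run on a local machine as is. As a rough local stand-in, assuming job arguments arrive on the command line as `--name value` pairs, a small `argparse` wrapper can mimic the lookup. `parse_job_args` is a hypothetical helper, not part of `awsglue`, and it does not reproduce every behaviour of `getResolvedOptions`.

```python
import argparse


def parse_job_args(argv, names):
    """Return a dict holding the values of the named --arguments found in argv.

    argv is expected in sys.argv form, with the script name in position 0.
    """
    parser = argparse.ArgumentParser(allow_abbrev=False)
    for name in names:
        # dest=name keeps the key identical to the argument name
        parser.add_argument("--" + name, dest=name, required=True)
    # Arguments that were not asked for are ignored rather than rejected
    known, _unknown = parser.parse_known_args(argv[1:])
    return {name: getattr(known, name) for name in names}
```

Calling `parse_job_args(sys.argv, ['inputLocation', 'outputLocation'])` after `import sys` yields a dictionary that can be indexed the same way as `args` in the snippet above.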

tip

You may use amorphic utils for accessing the API as well. Check the next topic for more details.