Ingestion from Postgres DB to Amorphic

info

- Follow the steps mentioned below.

- Total time taken for this task: 10 Minutes.

- Pre-requisites: Setup Amorphic and login.

Tidbits

- Add your ⚠️userid⚠️ as a suffix to all the resources that you create.

- This will avoid contention with other users as Amorphic maintains a global metadata catalog. 💡

- This is mandatory as multiple users are following these workshop instructions.

Create a connection

- Click on 'Connections' widget on the home screen or click on

INGESTION-->Connectionson the left side navigation-bar or you may also click onNavigatoron top right corner and search forConnections. - Click on a ➕ icon at the top right corner.

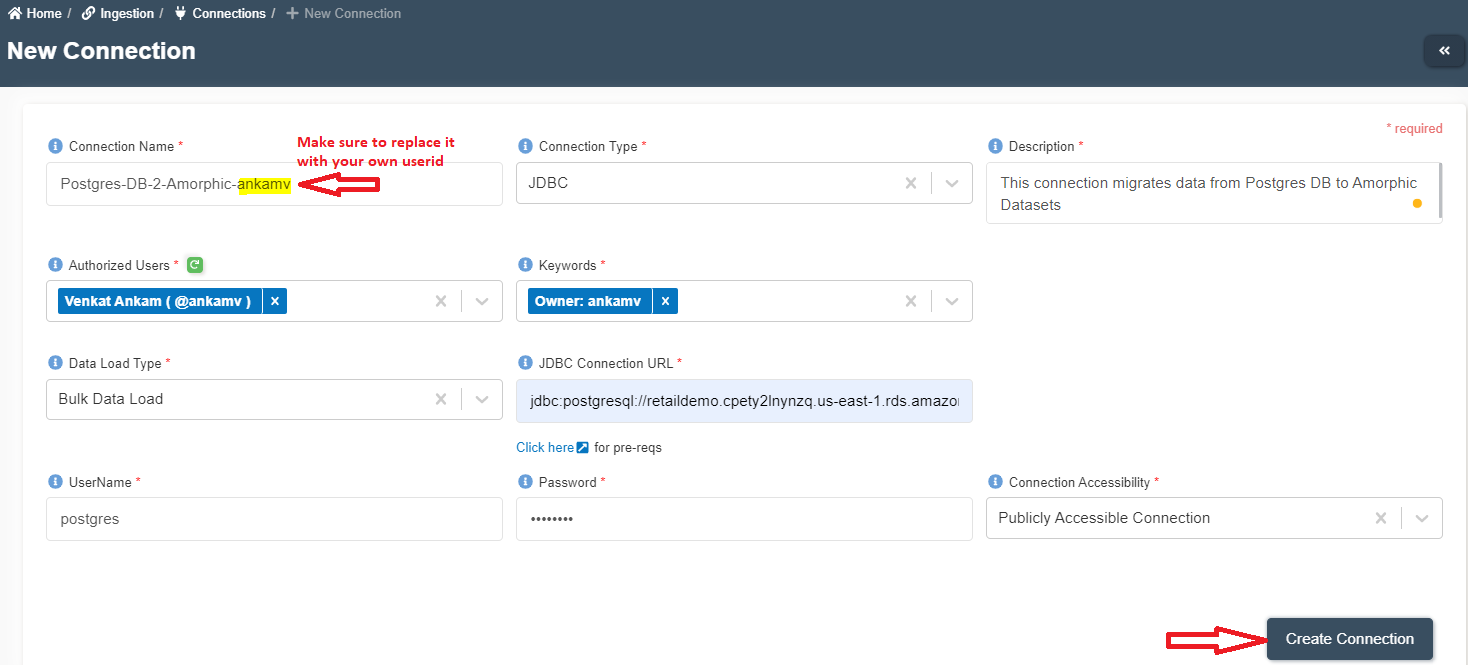

- Enter the following details and click on create connection.

{

"Connection Name": "Postgres-DB-2-Amorphic-<your-userid>"

"Connection Type": "JDBC"

"Description": "This connection migrates data from Postgres DB to Amorphic Datasets"

"Authorized Users": "Select your user name and any other user names you want to grant permission"

"Keywords": "Add relevant keywords like 'Retail'. This will be useful for search"

"Version": "2.0"

"Data Load Type": "Bulk Data Load"

"JDBC Connection URL": "jdbc:postgresql://retaildemo.cpety2lnynzq.us-east-1.rds.amazonaws.com:5432/retail"

"User Name": "postgres"

"Password": "contact admin"

"Connection Accessibility": "Publicly accessible Connection"

}

Create Connection Screen Capture:

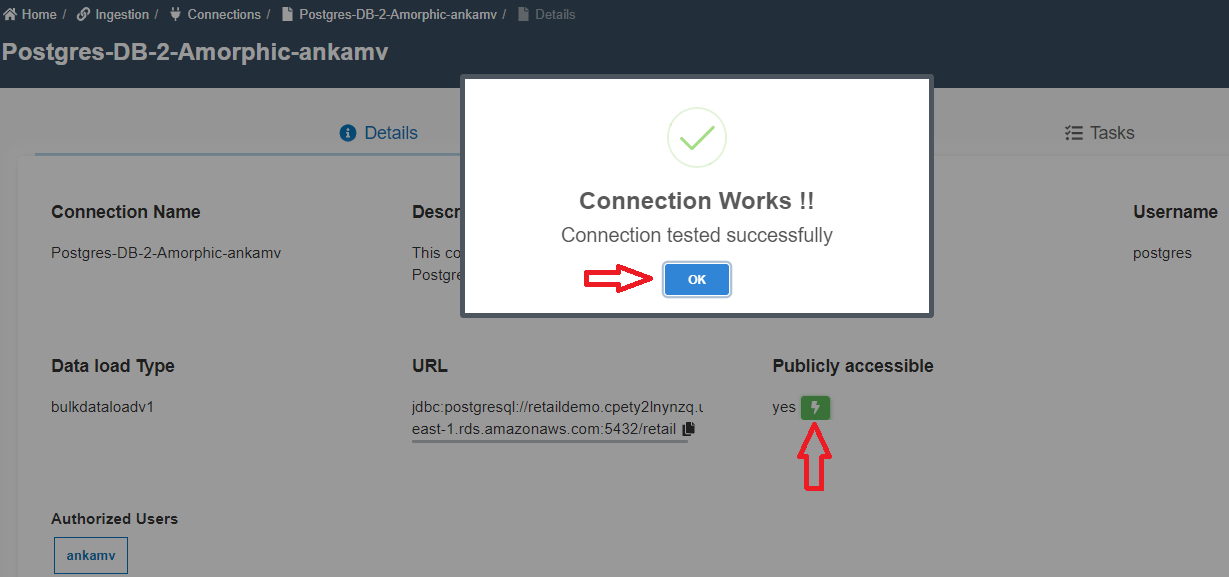

Test Connection

- On the newly created connection page,

- Click on ⚡ icon in 'Publicly Accessible' section.

- It should show "Connection Works" as shown in below image. Click 'OK'.

- If the connection test fails, click on 'Edit Connection' at the top right corner to re-enter the details and click on 'Update'.

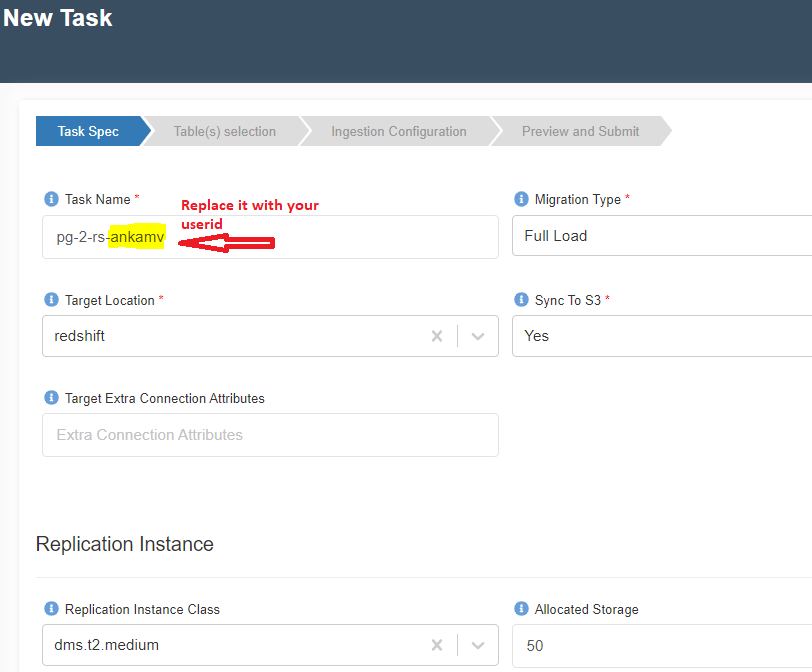

Create a Task

- Click on the 'Tasks' tab. You will see 'No tasks found' or previously created tasks.

- Click on ➕ icon at the top right corner to create a new task.

- Enter the following details and click Next.

{

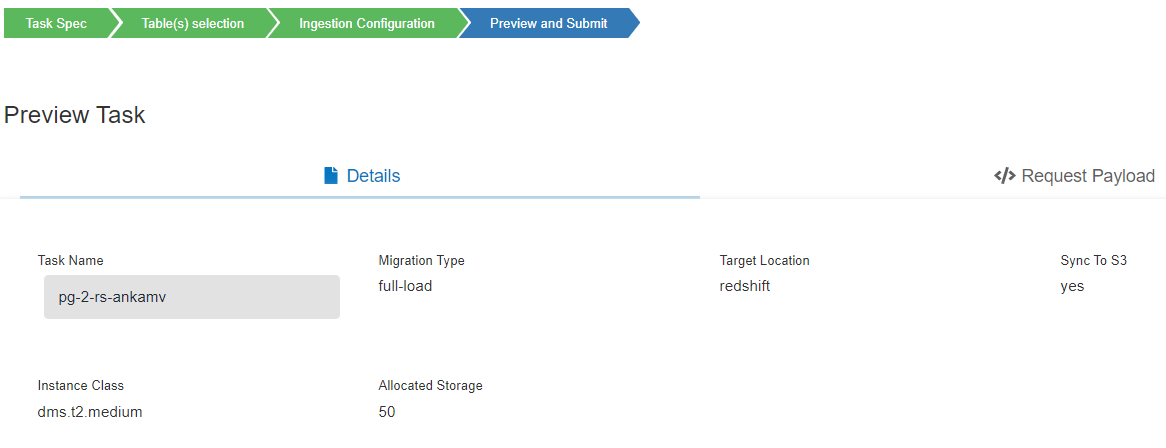

"Task Name": "pg-2-rs-<your-userid>"

"Migration Type": "Full load"

"Target Location": "Redshift" (S3 or S3Athena are ideal for ETL. But, let's use Redshift for this workshop)

"Sync to S3": "Yes"

"Target Extra Connection Attributes": <-- Leave it blank

"Replication Instance Class": "dms.t2.medium"

"Allocated Storage": "50"

}

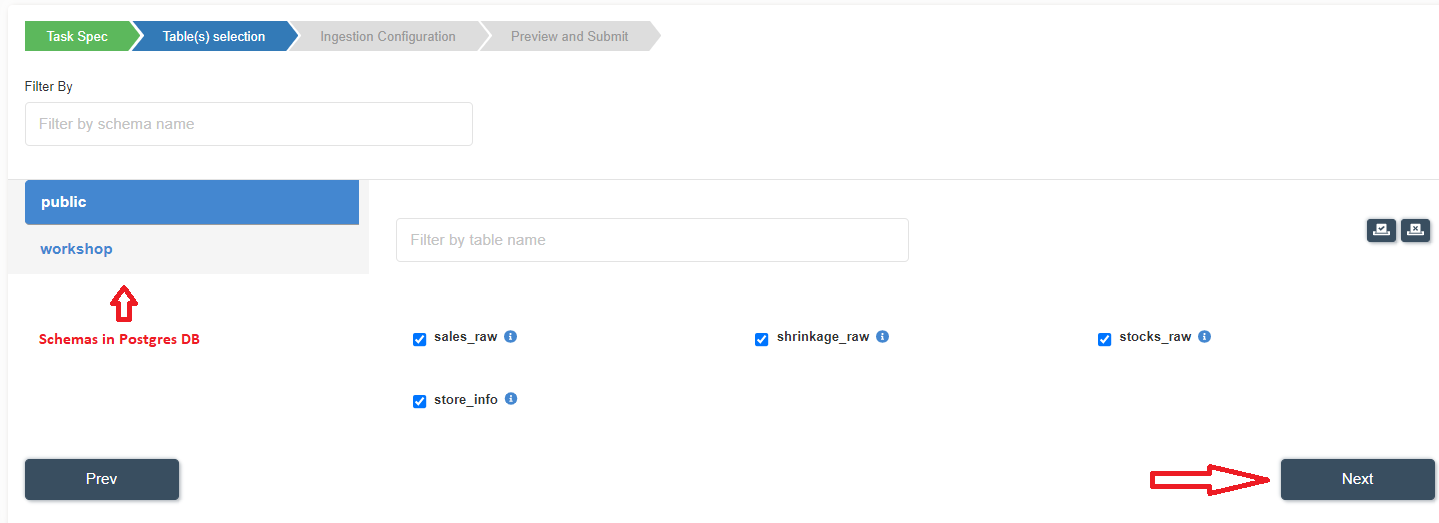

- Select

sales_raw,shrinkage_raw,stocks_raw,store_infotables frompublicschema and click 'Next' as shown below.

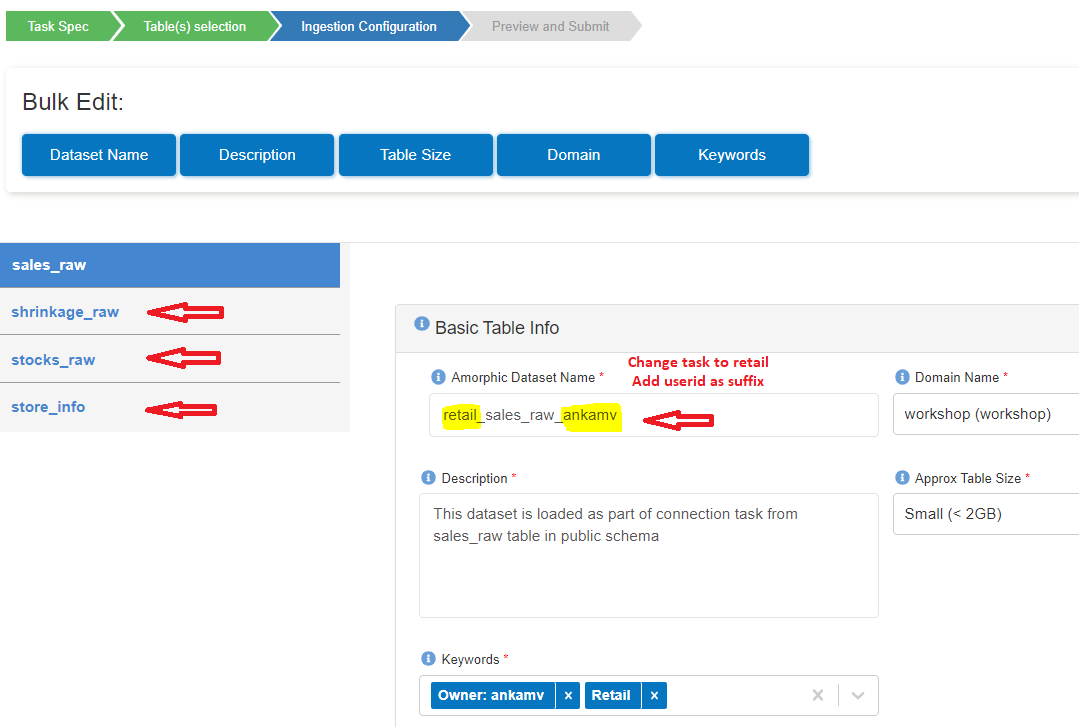

- Change information as mentioned below for all four tables. Tables can be selected by clicking on the table name. You may also choose to bulk edit Dataset Name, Domain and Keywords.

{

"Amorphic Dataset Name": " Change 'Task' to 'Retail'" ; add your userid as suffix ## So, 'Task_sales_raw' will become 'Retail_sales_raw_<your-userid>'

"Domain Name": "workshop (workshop)" ## This will be nothing but a schema in Redshift

"Description": "Keep as it is"

"Approx Table Size": "Small (< 2GB)""

"Keywords": "Add 'Retail'"

}

- ⚠️ Click 'Next' only after changing the above information for all tables as shown below.⚠️

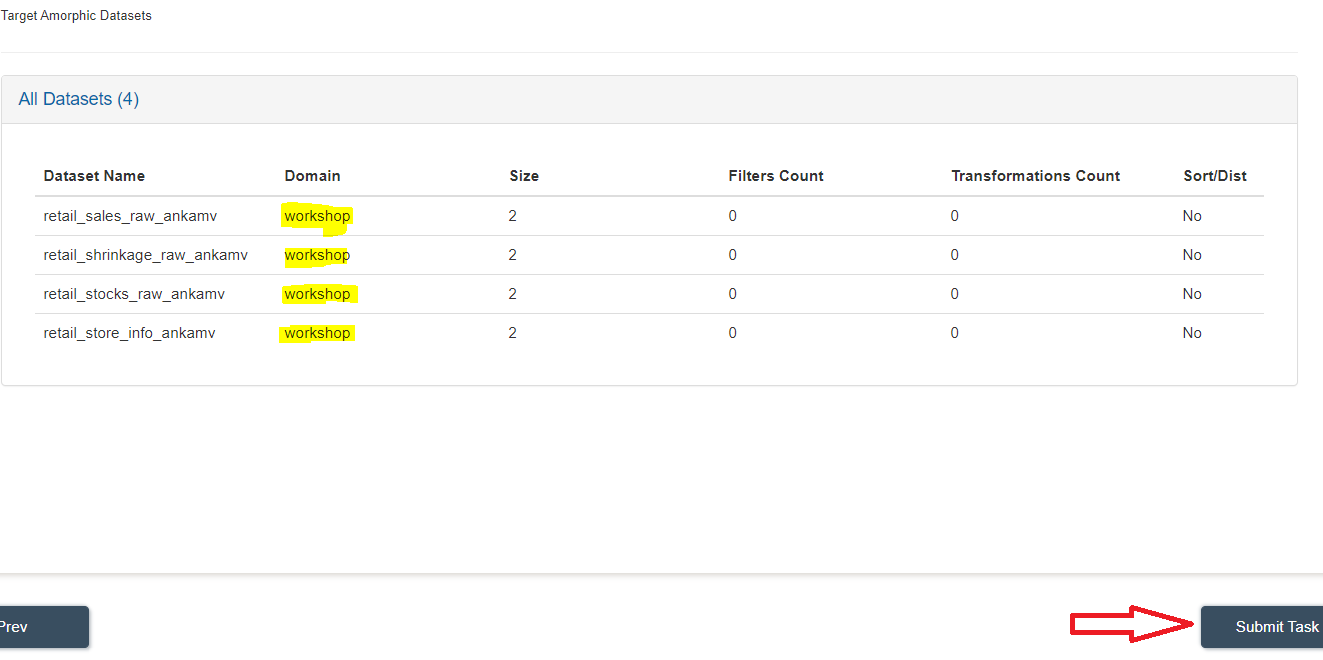

- In the last step, verify the details, datasets and click on 'Submit Task' as shown below.

You will see a message like this -->

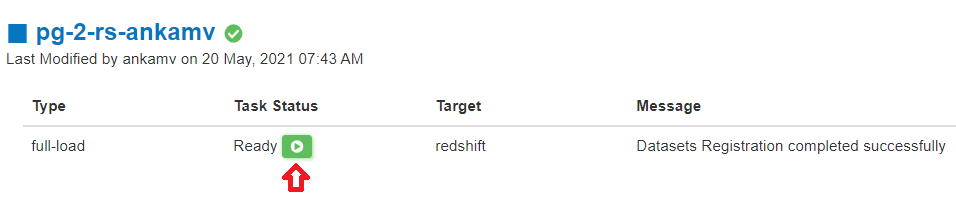

Once the Datasets are registered successfully, you will see a Ready status and a ▶️ button as shown below. If you don't see ready status, click ↩️ icon in front of 'Message'.

- Click on ▶️ button and you will get a message 'Start Task'. Click 'Yes, Start Task' as shown below.

- Refresh the task status by clicking ↩️ icon in front of 'Message'.

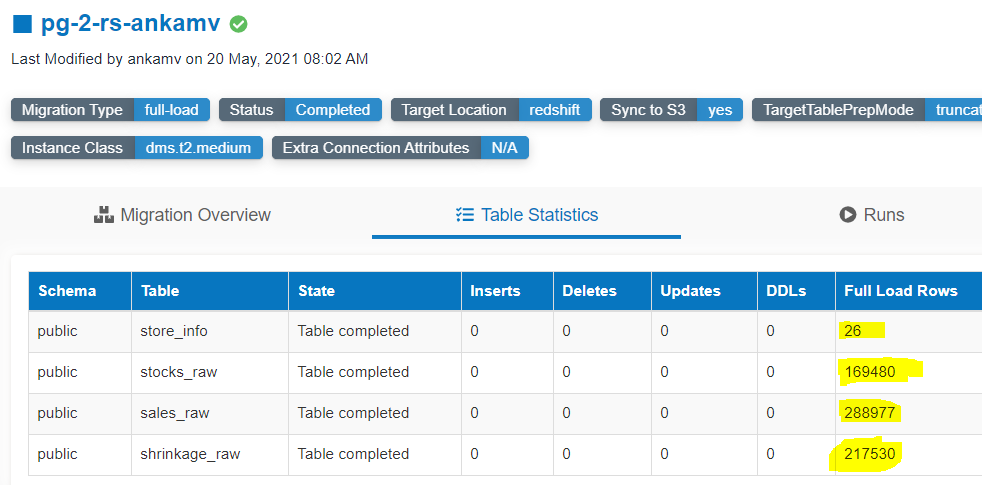

- Once the task status is 'Completed', Click on 👁 'View' icon to check the statistics.

- Click on 'Table Statistics' tab. You will see the stats similar to the below image.

- Now, click on 'DATASETS' --> 'Datasets' from left navigation-bar.

- You will be able to see the datasets created as part of the task.

- Click on 'View Details' of 'retailsales_raw<your_userid>' dataset widget.

- Click on 'Files' tab.

- You will see two CSV files created as part of the migration task.

Congratulations!!!

You have learned how to migrate multiple tables to Amorphic. Now, proceed to 'ETL' task.